On-Demand Human Preference Data for AI Training

In a previous blog post and paper we presented a benchmark for evaluating generative text-to-image models based on a collected large scale preference dataset consisting of more than 2 million responses from real humans. This large dataset was acquired in just a few days using Rapidata’s unique platform, and in this post we will show how you can easily set up and run the annotation process to collect a huge preference dataset yourself.

The Data

The preference dataset is made up of a large amount of pairwise comparisons between images generated from different models. For this demo, you can download a small dataset of images generated using Flux.1 [pro] and Stable Diffusion 3 Medium. The dataset is available on our huggingface page. The dataset contains the relevant images (images.zip) as well as a csv-file defining the matchups (matchups.csv).

Configuring and Starting the Annotation Process

For the annotation setup we will utilize the Rapidata API which can be easily used through our python package. To install the package run

pip install rapidataIf you are interested in learning more about the package, take a closer look at the documentation. However, this is not needed to follow along with this guide.

- As a first step, import the necessary packages and create the client object that will be used in configuring the rest of the setup. When running this code for the first time it will open up a browser window, prompting you to log in. After successfully logging in, it will then save the credentials to

~/.config/rapidata/credentials.jsonfor future use.

import os

from rapidata import RapidataClient

import pandas as pd

from datasets import load_dataset

client = RapidataClient()- Now, let us import the data from the dataset. The csv is neatly formatted with each of the image pairs and their respective prompts, making this straightforward. The images and prompts are saved to later be used in the order. For demonstration purposes, I sample a subset of the pairs. Based on the settings presented in step 3, this should allow the necessary amount of responses to be collected in less than ten minutes. If you do not mind waiting, feel free to include more pairs.

example_csv_path = "/path/to/matchups.csv"

media_path = "/path/to/images"

df = load_dataset("Rapidata/image-preference-demo", split="test").to_pandas()

media = []

prompts = []

for index, row in df.sample(40).iterrows():

media.append([os.path.join(media_path, row["image1"]), os.path.join(media_path, row["image2"])])

prompts.append(row["prompt"])- The next step is to create the order using our API. In this case we specify the number of responses we want to be 15, and are adding a validation set, which ensures that the labelers understand the question. We have prepared a predefined validation set for this specific task, however these can also be customized if needed. Consult the documentation or reach out for more information in this regard.

order = client.order.create_compare_order(

name="Benchmark Demo",

instruction="Which image fits the description better?",

datapoints=media,

contexts=prompts,

responses_per_datapoint=15,

validation_set_id="66d0e7ea8c33f56b460ea91f",

)- So far we have not consulted any humans, by calling the

.run()method on the order, we will start the annotation process.

order.run()

order.display_progress_bar() # Optional, to display a progress barFetching the Results

Once the order has finished, you can easily get the results through the order object by calling the .get_results() method.

results = order.get_results()If the kernel has been restarted, you can find the order object again using the client.order.find_orders() method.

client = RapidataClient()order = client.order.find_orders(name="Benchmark Demo")[0] # Returns the most recent order with the name "Benchmark Demo"

results = order.get_results()Analyzing the Results

The raw results come as a json object, however for analysis purposes we can extract it to a pandas dataframe using this utility function.

Expand to see utility function, get_df_from_results()

def get_df_from_results(results):

res = []

for r in results["results"]:

prompt = r["context"]

items = list(r['aggregatedResults'].items())

# Extract flux data (first item)

flux_image = items[0][0]

flux_votes = items[0][1]

# Extract stable diffusion data (second item)

sd_image = items[1][0]

sd_votes = items[1][1]

res.append({"prompt": prompt, "image_flux": flux_image, "image_stable_diffusion": sd_image,

'flux': flux_votes, 'stable_diffusion': sd_votes})

df = pd.DataFrame(res)

# get the ratio of votes, it should always be the highest, so not always flux/stable_diffusion, sometimes stable_diffusion/flux

df['ratio'] = df[['flux', 'stable_diffusion']].max(axis=1) / df[['flux', 'stable_diffusion']].sum(axis=1)

# sort by ratio

df = df.sort_values('ratio', ascending=False)

return dfresults_df = get_df_from_results(results)To find a winner between the two model, we e.g., look at which model got the most votes.

def get_votes_per_model(df):

return df[['flux', 'stable_diffusion']].sum()

votes_per_model = get_votes_per_model(results_df)

print(votes_per_model)Visualization

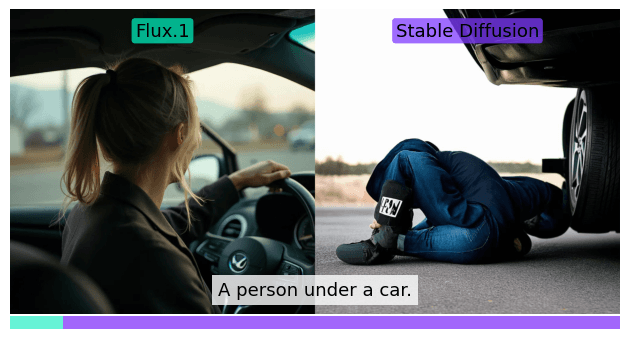

The following function provides a simple visualization of the individual matchups and the votes they received, similar to the image shown below.

Expand to see utility function, plot_image_comparison()

from matplotlib import pyplot as plt

import numpy as np

def plot_image_comparison(prompt, image1_path, image2_path, votes1, votes2):

# Create figure and axes

fig, (ax_images, ax_bar) = plt.subplots(2, 1, gridspec_kw={'height_ratios': [24, 1], 'hspace': 0})

# Load images

img1 = plt.imread(image1_path)

img2 = plt.imread(image2_path)

# Display images side by side

ax_images.imshow(np.hstack((img1, img2)))

text_settings = {

'horizontalalignment': 'center',

'verticalalignment': 'bottom',

'transform': ax_images.transAxes,

'fontsize': 13,

'wrap': True

}

bbox_settings = {

'alpha': 0.75,

'edgecolor': 'none',

'boxstyle': 'round,pad=0.2' # This adds rounded corners

}

# add the names of the models, Flux.1 and Stable Diffusion as a text on top of the images

ax_images.text(0.25, 0.9, 'Flux.1', **text_settings, bbox=dict(facecolor='#00ecbb', **bbox_settings))

ax_images.text(0.75, 0.9, 'Stable Diffusion', **text_settings, bbox=dict(facecolor='#803bff', **bbox_settings))

txt = ax_images.text(0.5, 0.05, prompt,

horizontalalignment='center',

verticalalignment='bottom',

transform=ax_images.transAxes,

fontsize=13,

bbox=dict(facecolor='white', alpha=0.8, edgecolor='none'),

wrap=True)

txt._get_wrap_line_width = lambda : 525

ax_images.axis('off')

# Calculate vote percentages

total_votes = votes1 + votes2

percent1 = votes1 / total_votes * 100

percent2 = votes2 / total_votes * 100

# Create horizontal bar for votes

ax_bar.barh(y=0, width=percent1, height=0.5, align='center', color='#00ecbb', alpha=0.6)

ax_bar.barh(y=0, width=percent2, height=0.5, align='center', color='#6400f9', alpha=0.6, left=percent1)

# Configure bar axis

ax_bar.set_xlim(0, 100)

ax_bar.set_ylim(-0.25, 0.25)

ax_bar.axis('off') # Remove all axes

# Adjust layout and reduce space between subplots

plt.tight_layout()

plt.subplots_adjust(top=0.67, bottom=0.0)

plt.show()for i, row in results_df[:5].iterrows():

if isinstance(row['image_flux'],str) and isinstance(row['image_stable_diffusion'],str):

plot_image_comparison(row['prompt'],

os.path.join(media_path, row['image_flux']),

os.path.join(media_path, row['image_stable_diffusion']),

row['flux'], row['stable_diffusion'])Conclusion

Through this blog post you have seen how easily you can start collecting preference data from real humans through the Rapidata API with just a few lines of code. This guide serves as a starting point and now you are ready to customize the setup to your specific needs. If you have any questions or need help, feel free to reach out to us at info@rapidata.ai.